

Post list: all categories (125)

iMTE

Sanity checks for saliency maps: Summary [XAI-6 (2)]

Paper title: Sanity checks for saliency maps. Paper URL: arxiv.org/abs/1810.03292. Abstract: "Saliency methods have emerged as a popular tool to highlight features in an input deemed relevant for the prediction of a learned model. Several saliency methods have been proposed, often guided by visual appeal on image data. In this work, we propose an a.." Key points: 1) Saliency maps ..

Paper title: Sanity checks for saliency maps. Paper URL: arxiv.org/abs/1810.03292. Summary of key equations: 0) Definition in..

SmoothGrad: removing noise by adding noise: Summary [XAI-5]

Paper title: SmoothGrad: removing noise by adding noise. Paper URL: arxiv.org/abs/1706.03825. Abstract: "Explaining the output of a deep network remains a challenge. In the case of an image classifier, one type of explanation is to identify pixels that strongly influence the final decision. A starting point for this strategy is the gradient of the class score.." Key ..
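As a rough illustration of the idea in the paper's title, SmoothGrad averages the gradient of the class score over several noisy copies of the input. The sketch below is not taken from the post: the toy function `score`, its analytic gradient `grad_score`, and the parameters `n_samples`, `sigma`, and `seed` are hypothetical stand-ins for a real network and its backpropagated gradient.

```python
import numpy as np

def score(x):
    # Hypothetical toy "class score": a smooth scalar function of the input.
    return np.sum(np.sin(3.0 * x))

def grad_score(x):
    # Analytic gradient of the toy score with respect to each input element.
    return 3.0 * np.cos(3.0 * x)

def smoothgrad(x, grad_fn, n_samples=50, sigma=0.1, seed=0):
    """Average grad_fn over n_samples copies of x perturbed by Gaussian noise."""
    rng = np.random.default_rng(seed)
    total = np.zeros_like(x, dtype=float)
    for _ in range(n_samples):
        noisy = x + rng.normal(0.0, sigma, size=x.shape)
        total += grad_fn(noisy)
    return total / n_samples

x = np.linspace(-1.0, 1.0, 5)
vanilla = grad_score(x)                # plain gradient "saliency"
smoothed = smoothgrad(x, grad_score)   # noise-averaged gradient
print(vanilla)
print(smoothed)
```

With `sigma=0.0` the function reduces to the plain gradient, which is a convenient sanity check when swapping in a real model's gradient function.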

Smooth Grad-CAM++: Summary [XAI-4]

Paper title: Smooth Grad-CAM++: An Enhanced Inference Level Visualization Technique for Deep Convolutional Neural Network Models. Paper URL: arxiv.org/abs/1908.01224. Abstract: "Gaining insight into how deep convolutional neural network models perform image classification and how to explain their outpu.."

Grad-CAM++: Summary [XAI-3]

Paper title: Grad-CAM++: Generalized Gradient-based Visual Explanations for Deep Convolutional Networks. Paper URL: arxiv.org/pdf/1710.11063.pdf. This summary is based on the version published at IEEE WACV (2018, ieeexplore.ieee.org/document/8354201). The arXiv version is the more extended one, so reading it is recommended for a deeper understanding of Grad-CAM++. Key points: 1) Deep models are "black boxes" whose internal functions are hard to understand. To address this..

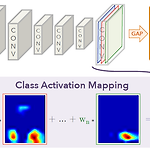

CAM (Class activation mapping): Summary [XAI-2]

Paper title: Learning deep features for discriminative localization. Paper URL: openaccess.thecvf.com/content_iccv_2017/html/Selvaraju_Grad-CAM_Visual_Explanations_ICCV_2017_paper.html (ICCV 2017 Open Access Repository. Grad-CAM: Visual Explanations From Deep Networks via Gradient-Based Localization. Ramprasaath R. Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, Dhruv Batra; Pr..)

Grad-CAM (Gradient-weighted class activation mapping): Summary [XAI-1]

Paper title: Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. Paper URL: openaccess.thecvf.com/content_iccv_2017/html/Selvaraju_Grad-CAM_Visual_Explanations_ICCV_2017_paper.html (ICCV 2017 Open Access Repository. Ramprasaath R. Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, De..)

activation functions

In [1]: `import matplotlib.pyplot as plt; import numpy as np`

Step function

In [2]: `def step(x): return 1*(x>0)`

In [3]: `inputs = np.arange(-5,5,0.01); outputs = step(inputs); plt.figure(figsize=(8,5)); plt.plot(inputs,outputs,label='Step function'); plt.hlines(0,-5,5); plt.vlines(0,0,1); plt.xlabel('input',fontsize=24); plt.ylabel('output',fontsize=24); plt.grid(alpha=0.3); plt.title("..`
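The step function in the excerpt above can be run on its own as follows. This is a minimal sketch that keeps the excerpt's input grid and omits the plotting calls, since the original plotting cell is cut off; the `np.where` form is one assumed way to write the same thresholding as `1*(x>0)`.

```python
import numpy as np

def step(x):
    """Step activation: 1 where x > 0, else 0 (element-wise)."""
    x = np.asarray(x)
    return np.where(x > 0, 1, 0)

# Same input grid as the excerpt: -5 up to (but not including) 5, step 0.01.
inputs = np.arange(-5, 5, 0.01)
outputs = step(inputs)

# The output only ever takes the two values 0 and 1.
print(np.unique(outputs))
print(step([-1.0, 0.0, 2.5]))
```

Note that the input 0 maps to 0 here, because the comparison is strict (`x > 0`), matching the excerpt's definition.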

Meta-learning with Implicit Gradients [1] https://papers.nips.cc/paper/2019/hash/072b030ba126b2f4b2374f342be9ed44-Abstract.html Introduction: In the meta-learning framework, the bi-level optimization procedure is divided into two parts: 1) inner optimization, in which the base learner learns a given task, and 2) outer optimization, in which the meta learner learns across multiple tasks. MAML, DAML, Reptile, and similar methods belong to the optimization-based methods. (Hands-on one-shot..
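The two levels described above can be written compactly as follows. The notation is an assumption for illustration and does not come from the excerpt: $\theta$ denotes the meta-parameters, $\phi_i$ the task-adapted parameters, $\mathcal{D}_i^{\mathrm{tr}}$ and $\mathcal{D}_i^{\mathrm{test}}$ the training and test sets of task $i$, $M$ the number of tasks, and $\alpha$ the inner step size.

$$
\begin{aligned}
\text{outer:}\quad & \theta^{*} = \arg\min_{\theta} \; \frac{1}{M} \sum_{i=1}^{M} \mathcal{L}\big(\phi_i(\theta),\, \mathcal{D}_i^{\mathrm{test}}\big) \\
\text{inner:}\quad & \phi_i(\theta) = \mathrm{Alg}\big(\theta,\, \mathcal{D}_i^{\mathrm{tr}}\big), \qquad \text{e.g. for MAML with one step: } \phi_i(\theta) = \theta - \alpha \nabla_{\theta} \mathcal{L}\big(\theta,\, \mathcal{D}_i^{\mathrm{tr}}\big)
\end{aligned}
$$

Here the inner line is the base learner adapting to a single task, and the outer line is the meta learner choosing $\theta$ so that the adapted parameters perform well across tasks.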