List: xai (20)

iMTE

CAMERAS: Enhanced Resolution And Sanity Preserving Class Activation Mapping For Image Saliency Summary [XAI-24]

Paper title: CAMERAS: Enhanced Resolution And Sanity Preserving Class Activation Mapping For Image Saliency

Paper URL: https://arxiv.org/abs/2106.10649

Abstract (excerpt): Backpropagation image saliency aims at explaining model predictions by estimating model-centric importance of individual pixels in the input. However, class-inse..

Revisiting The Evaluation of Class Activation Mapping for Explainability: A Novel Metric and Experimental Analysis Summary [XAI-23]

Paper title: Revisiting The Evaluation of Class Activation Mapping for Explainability: A Novel Metric and Experimental Analysis

Paper URL: https://openaccess.thecvf.com/content/CVPR2021W/RCV/html/Poppi_Revisiting_the_Evaluation_of_Class_Activation_Mapping_for_Explainability_A_CVPRW_2021_paper.html

Source: CVPR 2021 Open Access Repository

Towards Better Explanations of Class Activation Mapping Summary [XAI-22]

Paper title: Towards Better Explanations of Class Activation Mapping

Paper URL: https://arxiv.org/abs/2102.05228

Abstract (excerpt): Increasing demands for understanding the internal behavior of convolutional neural networks (CNNs) have led to remarkable improvements in explanation methods. Particularly, several class activation mapping (CAM) based methods, which gene..

Towards Learning Spatially Discriminative Feature Representation Summary [XAI-21]

Paper title: Towards Learning Spatially Discriminative Feature Representations

Paper URL: https://arxiv.org/abs/2109.01359

Abstract (excerpt): The backbone of traditional CNN classifier is generally considered as a feature extractor, followed by a linear layer which performs the classification. We propose a novel loss function, termed as CAM-loss, to constrai..

Informative Class Activation Maps Summary [XAI-20]

Paper title: Informative Class Activation Maps

Paper URL: https://arxiv.org/abs/2106.10472

Abstract (excerpt): We study how to evaluate the quantitative information content of a region within an image for a particular label. To this end, we bridge class activation maps with information theory. We develop an informative class activation map (infoCAM). Given a classi..

Key points: 1) 저..

Eigen-CAM: Class Activation Map Using Principal Components Summary [XAI-19]

Paper title: Eigen-CAM: Class Activation Map Using Principal Components

Paper URL: https://arxiv.org/abs/2008.00299

Abstract (excerpt): Deep neural networks are ubiquitous due to the ease of developing models and their influence on other domains. At the heart of this progress is convolutional neural networks (CNNs) that are capable of learning representations or fe..

Combinational Class Activation Maps for Weakly Supervised Object Localization Summary [XAI-18]

Paper title: Combinational Class Activation Maps for Weakly Supervised Object Localization

Paper URL: https://openaccess.thecvf.com/content_WACV_2020/html/Yang_Combinational_Class_Activation_Maps_for_Weakly_Supervised_Object_Localization_WACV_2020_paper.html

Source: WACV 2020 Open Access Repository. Seunghan Yang, Yoonhyung Kim, Youngeun Kim, Changick Kim; Proceedings of the IEEE/CVF Winter Conference on Applica..

How to Manipulate CNNs to Make Them Lie: the GradCAM Case Summary [XAI-16]

Paper title: How to Manipulate CNNs to Make Them Lie: the GradCAM Case

Paper URL: https://arxiv.org/abs/1907.10901

Abstract (excerpt): Recently many methods have been introduced to explain CNN decisions. However, it has been shown that some methods can be sensitive to manipulation of the input. We continue this line of work and investigate the explanation method Gra..

Axiom-based Grad-CAM: Towards Accurate Visualization and Explanation of CNNs Summary [XAI-15]

Paper title: Axiom-based Grad-CAM: Towards Accurate Visualization and Explanation of CNNs

Paper URL: https://arxiv.org/abs/2008.02312

Abstract (excerpt): To have a better understanding and usage of Convolution Neural Networks (CNNs), the visualization and interpretation of CNNs has attracted increasing attention in recent years. In particular, sev..

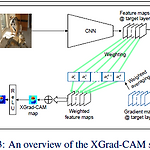

Grad-CAM: Why did you say that? Summary [XAI-14]

Paper title: Grad-CAM: Why did you say that?

Paper URL: https://arxiv.org/abs/1611.07450

Abstract (excerpt): We propose a technique for making Convolutional Neural Network (CNN)-based models more transparent by visualizing input regions that are 'important' for predictions -- or visual explanations. Our approach, called Gradient-weighted Class Activation Mapping..

Key points: 1) Grad-C..